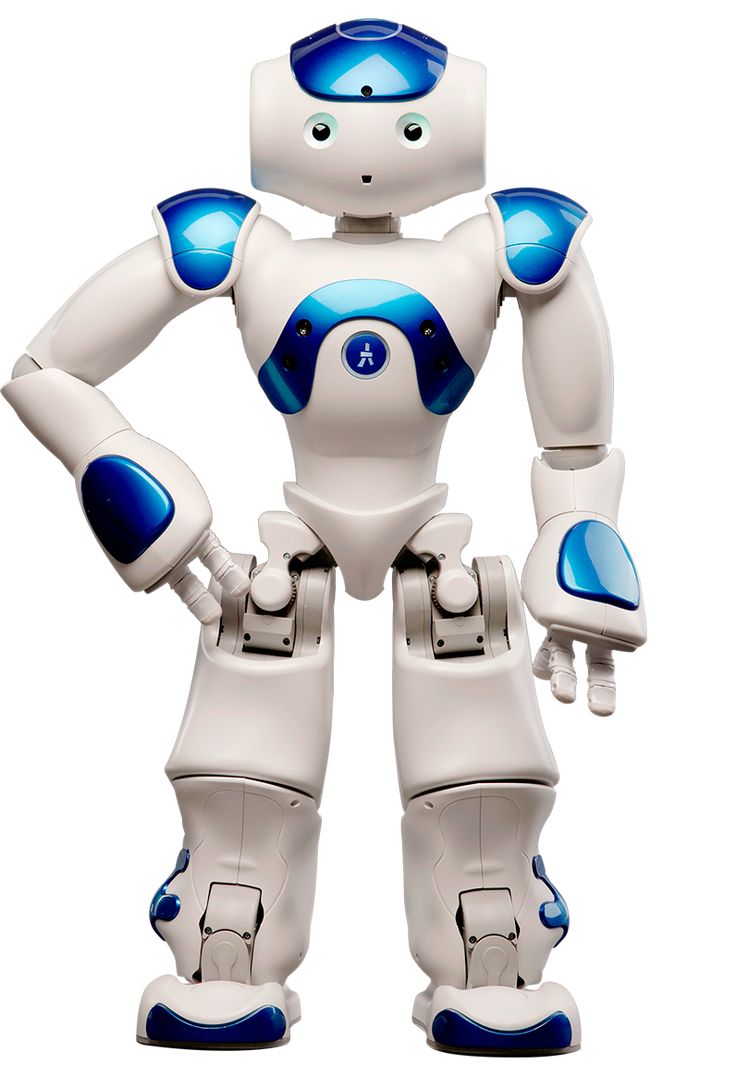

We've all been there: watching a humanoid robot and feeling that peculiar mix of fascination and unease. Something just feels... off. Their expressions, while impressive, often leave us uncomfortable rather than connected. Now Japanese researchers are tackling this decades-old problem with a surprisingly musical approach: they're treating facial expressions like waves.

The breakthrough comes from Osaka University, where scientists have reimagined how robot faces move by breaking down expressions into dynamic waves - think of it like a symphony, where different facial movements play together in harmony. Instead of programming rigid, pre-set expressions, they're letting robot faces flow naturally, synchronized with physical cues like breathing rhythms and heartbeats.

This matters because robots have long struggled with what's known as the "uncanny valley," a concept introduced by Japanese roboticist Masahiro Mori in 1970. The idea is both simple and strange: as a robot becomes more human-like, we warm to it, until it gets close to human but not quite there, at which point its tiny imperfections start to creep us out. It's why even advanced humanoids like Engineered Arts' Ameca, despite their impressive capabilities, can still make people uncomfortable when they try to smile or frown.

"Although our results show an almost instant emotional response, there is still a lot to strive for, especially in terms of the authenticity of the gaze," explains Koichi Osuka, who led the research. The eyes, it turns out, remain a particularly tough nut to crack - they're often described as "windows to the soul," and getting them right in robots is proving to be one of the field's biggest challenges.

The new wave-based approach places emotional states on a spectrum from calm to excited. When a robot needs to appear drowsy, for instance, it doesn't just slow down: its entire face responds in concert, with longer blinks and slower breathing. It's like watching an orchestra, with each facial feature playing its part in time.
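The article doesn't spell out the Osaka team's actual equations, so the following is only a toy Python sketch of the general idea, not their model: each rhythmic facial motion is treated as a sine wave, and a single hypothetical "arousal" value between 0 (drowsy) and 1 (excited) slows every wave and lengthens blinks at the same time. The `Wave` class, the half-speed rule, and the blink timings are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Wave:
    """One rhythmic facial component in this toy model (hypothetical, not from the study)."""
    name: str
    base_freq_hz: float  # cycles per second when fully excited (arousal = 1.0)
    amplitude: float     # peak displacement in arbitrary actuator units


def modulated_freq(wave: Wave, arousal: float) -> float:
    """Assumed rule: a drowsy face (arousal 0.0) runs every wave at half speed."""
    return wave.base_freq_hz * (0.5 + 0.5 * arousal)


def blink_duration_s(arousal: float, fastest: float = 0.15, slowest: float = 0.45) -> float:
    """Assumed rule: blink length grows linearly as arousal falls."""
    return slowest + (fastest - slowest) * arousal


def face_signal(waves: list[Wave], arousal: float, t: float) -> float:
    """Superimpose every wave at time t (seconds) for an arousal level in [0, 1]."""
    if not 0.0 <= arousal <= 1.0:
        raise ValueError("arousal must be in [0, 1]")
    return sum(
        w.amplitude * math.sin(2 * math.pi * modulated_freq(w, arousal) * t)
        for w in waves
    )


if __name__ == "__main__":
    waves = [
        Wave("breathing", base_freq_hz=0.3, amplitude=1.0),  # about 18 breaths per minute when excited
        Wave("brow_sway", base_freq_hz=0.1, amplitude=0.3),  # slow, subtle drift of the brows
    ]
    for label, arousal in [("drowsy", 0.0), ("excited", 1.0)]:
        print(
            label,
            f"breathing={modulated_freq(waves[0], arousal):.2f} Hz",
            f"blink={blink_duration_s(arousal):.2f} s",
            f"signal(t=1s)={face_signal(waves, arousal, 1.0):+.3f}",
        )
```

Because every wave reads the same arousal value, changing that one number shifts breathing, brow movement, and blink length together, which is the "in concert" behavior described above, rather than swapping between pre-set expressions.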

But some experts are suggesting we might be going about this all wrong. Instead of trying to perfectly mimic human faces, why not explore alternatives? Some propose using changing colors to signal emotions, or developing unique ways for robots to express themselves through sounds or body movements. After all, who says a robot needs to smile exactly like we do to be relatable?

This isn't just about making robots look prettier. A recent study in Frontiers in Psychology found that people feel more comfortable around robots that can express emotions naturally and consistently. That comfort is crucial for a future where humans and robots might work side by side. Imagine trying to collaborate with a colleague whose facial expressions constantly make you uncomfortable: not ideal for productivity!

The stakes only grow as robots move toward becoming common fixtures in our daily lives, from healthcare to education to elder care. In those settings, the ability to interact without causing discomfort becomes essential, and the success of these helpers may well depend on whether they can express themselves in ways that put us at ease rather than on edge.

While the Osaka University team's wave-based approach represents a significant leap forward, they're quick to acknowledge that we're still far from perfect robot expressions. But perhaps that's okay. Maybe the goal isn't to create robots that are indistinguishable from humans, but rather to develop machines that can communicate and interact with us in their own unique, comfortable way.

As we continue this journey toward human-robot coexistence, one thing becomes clear: the future of robot expressions might not lie in perfect imitation, but in finding new ways to bridge the gap between artificial and human emotion. And sometimes, that might mean letting robots be robots, waves and all.