Nvidia has posted record-setting AI training results with its Blackwell chips, reinforcing its lead in the AI hardware race. In the latest MLPerf Training v5.0 benchmark round, Nvidia reports that Blackwell delivered up to 2.5 times the performance of its previous-generation chips across the suite, which now includes pretraining Llama 3.1 405B, one of the largest openly available AI language models today.

What Makes Blackwell So Fast?

Nvidia’s Blackwell architecture is built for next-gen AI, with major upgrades that boost performance:

✔ Liquid-cooled racks – Help the chips sustain top performance under heavy load.

✔ 13.4 TB of memory per rack – Room for very large AI models and their training state.

✔ 5th-gen NVLink & Quantum-2 InfiniBand – High-bandwidth links between GPUs inside a rack (NVLink) and between racks (InfiniBand).

✔ Better AI software (NeMo Framework) – Tuned training software that gets more out of the same hardware.
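
To put the 13.4 TB figure in perspective, here is a rough back-of-the-envelope sketch of how much memory a 405-billion-parameter model might need during training. The bytes-per-parameter breakdown is an assumption (a common rule of thumb for mixed-precision training with the Adam optimizer), not something Nvidia or MLPerf published, and it ignores activations and other overhead, so treat the result as a lower bound.

```python
# Rough memory estimate for training a 405B-parameter model.
# All per-parameter byte counts below are assumptions (a common
# mixed-precision + Adam rule of thumb), not MLPerf or Nvidia figures.

PARAMS = 405e9          # parameter count of Llama 3.1 405B
RACK_MEMORY_TB = 13.4   # per-rack memory figure quoted in the list above

ASSUMED_BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam momentum": 4,
    "fp32 Adam variance": 4,
}


def to_terabytes(num_bytes: float) -> float:
    """Convert bytes to decimal terabytes (1 TB = 1e12 bytes)."""
    return num_bytes / 1e12


# Weights alone, stored in 16-bit precision.
weights_tb = to_terabytes(PARAMS * ASSUMED_BYTES_PER_PARAM["bf16 weights"])

# Weights + gradients + optimizer state (activations NOT included).
state_tb = to_terabytes(PARAMS * sum(ASSUMED_BYTES_PER_PARAM.values()))

print(f"Weights only:        {weights_tb:.2f} TB")
print(f"Full training state: {state_tb:.2f} TB")
print(f"Share of one rack:   {state_tb / RACK_MEMORY_TB:.0%}")
```

Under these assumptions, the training state alone takes up roughly half of a single rack's memory before any activations are counted, which is one reason training at this scale is spread across many racks connected by fast links.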

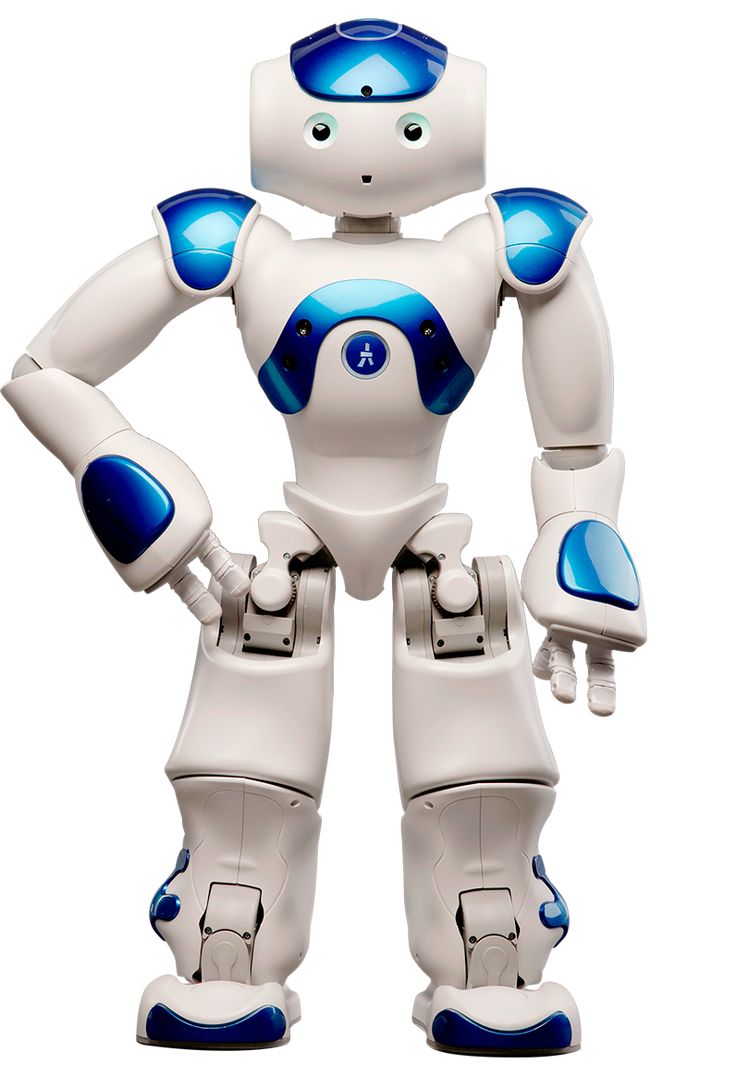

Real-World Impact: AI Factories & Smarter Robots

Blackwell isn’t just about raw speed. Nvidia is pitching it as the foundation for "AI factories": data centers designed to run advanced AI agents that can reason, solve problems, and generate valuable insights for industries like healthcare, finance, and robotics.

Dave Salvator from Nvidia says:

"This is just the beginning. AI investment starts with training, but the real payoff comes when businesses deploy these models. Blackwell makes that faster than ever."

What’s Next?

Nvidia plans further software and system optimizations for Blackwell to speed up both AI training and deployment. The company is no longer just a chip maker. It now builds full AI supercomputing systems, from GPUs to entire data centers.

Why Does This Matter?

Faster AI development = Cheaper, smarter apps sooner.

Better AI agents = More useful robots, assistants, and tools.

Nvidia’s dominance continues – Competitors like AMD and Intel have some catching up to do.

Will Blackwell make AI too powerful too fast? Or is this the breakthrough we’ve been waiting for? Let us know in the comments!