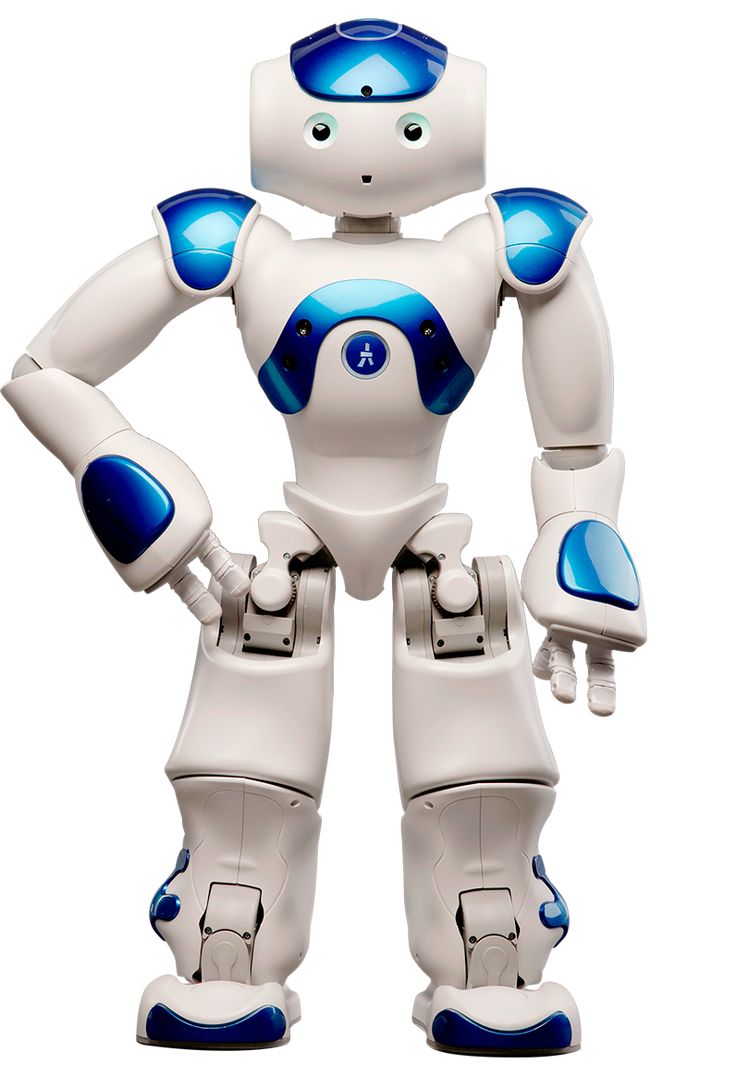

Researchers at Imperial College London and the Dyson Robot Learning Lab have introduced Render and Diffuse (R&D), a new method for teaching robots new skills. The approach aims to reduce the number of human demonstrations a robot needs and to improve its spatial generalization.

The R&D method, described in a paper posted to the arXiv preprint server, addresses a long-standing challenge in robotics: efficiently mapping high-dimensional data, such as images from RGB cameras, to goal-oriented robot actions. Solving this mapping is central to teaching robots to tackle new tasks reliably.

Lead author Vitalis Vosylius, a Ph.D. student at Imperial College London, explained the motivation behind the research: "Our recent paper was driven by the goal of enabling humans to teach robots new skills efficiently, without the need for extensive demonstrations." Vosylius noted that existing techniques are often data-intensive and generalize poorly in space: they tend to fail when objects are positioned differently than in the demonstrations.

The Render and Diffuse Method

The R&D method takes inspiration from how humans learn. When people pick up a manual skill, they don't perform extensive calculations; instead, they visualize how their hands should move to complete the task. R&D lets robots do something similar by "imagining" their actions within the camera image, using virtual renders of their own embodiment.

The method consists of two main components:

- Virtual Rendering: The system uses virtual renders of the robot, allowing it to "imagine" its actions in the same way it perceives the environment. This is achieved by rendering the robot in the configuration it would end up in if it were to take certain actions.

- Learned Diffusion Process: A learned diffusion process iteratively refines these imagined actions, producing the sequence of actions the robot needs to take to complete the task.
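The article does not describe how R&D's diffusion process is implemented. As a rough illustration of what "iteratively refining" a noisy action sequence means, here is a minimal Python sketch of deterministic, DDIM-style diffusion sampling over a short sequence of gripper positions. Everything in it is an assumption made for illustration: the cosine-shaped noise schedule, the action format, and especially `hypothetical_denoiser`, which stands in for a trained network and simply returns a known target. The sketch therefore shows only the structure of the sampling loop, not any learning or rendering.

```python
import numpy as np


def alpha_bar_schedule(num_steps: int) -> np.ndarray:
    """Cosine-shaped cumulative schedule (assumed): index 0 is clean (1.0), the last index is almost pure noise."""
    angles = np.linspace(0.0, np.pi / 2, num_steps + 1)
    return np.cos(angles) ** 2


def hypothetical_denoiser(noisy_actions: np.ndarray, step: int, target: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a learned network that predicts the clean action sequence.

    A real policy would condition on the camera image (and, in R&D, on renders of the
    noisy actions). Here we just return a known target so the loop is easy to follow.
    """
    return target


def refine_actions(target: np.ndarray, num_steps: int = 10, seed: int = 0):
    """Deterministic DDIM-style refinement from Gaussian noise toward an action sequence."""
    rng = np.random.default_rng(seed)
    alpha_bars = alpha_bar_schedule(num_steps)
    actions = rng.standard_normal(target.shape)  # start from pure noise
    errors = []
    for t in range(num_steps, 0, -1):
        clean_pred = hypothetical_denoiser(actions, t, target)
        # Noise implied by the current sample and the predicted clean actions.
        noise_pred = (actions - np.sqrt(alpha_bars[t]) * clean_pred) / np.sqrt(1.0 - alpha_bars[t])
        # Step to the next, less noisy level of the schedule.
        actions = np.sqrt(alpha_bars[t - 1]) * clean_pred + np.sqrt(1.0 - alpha_bars[t - 1]) * noise_pred
        errors.append(float(np.linalg.norm(actions - target)))
    return actions, errors


# Usage: a hypothetical 3-step sequence of gripper positions (x, y, z) in metres.
target = np.array([[0.40, 0.00, 0.20], [0.40, 0.10, 0.15], [0.45, 0.10, 0.10]])
final_actions, errors = refine_actions(target)
print(np.round(errors, 3))  # distance to the target shrinks at every step
```

Predicting the clean actions directly (rather than the noise) keeps the update numerically stable at the noisiest step; with a real, imperfect denoiser the predictions would change from step to step instead of being fixed.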

By representing robot actions and observations together as RGB images, R&D enables robots to learn various tasks with fewer demonstrations and improved spatial generalization capabilities.
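To make the idea of drawing actions into the image more concrete, the sketch below places a hypothetical three-point gripper model at the pose an action would reach, projects it into the camera image with a pinhole camera model, and draws it over the RGB observation. The gripper model, camera intrinsics, and function names are assumptions chosen for illustration; the paper's actual rendering pipeline may differ substantially.

```python
import numpy as np

# Hypothetical gripper model: palm and two fingertips in the gripper's own frame (metres).
GRIPPER_KEYPOINTS = np.array([
    [0.00, 0.0, 0.00],   # palm
    [0.04, 0.0, 0.05],   # fingertip
    [-0.04, 0.0, 0.05],  # other fingertip
])


def keypoints_for_action(position: np.ndarray, rotation: np.ndarray) -> np.ndarray:
    """Place the gripper model at the pose an action would reach (3x3 rotation, 3-vector position, camera frame)."""
    return GRIPPER_KEYPOINTS @ rotation.T + position


def project_points(points_cam: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Pinhole projection of Nx3 camera-frame points (z forward, z > 0) to Nx2 pixel coordinates (u, v)."""
    x, y, z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    return np.stack([fx * x / z + cx, fy * y / z + cy], axis=1)


def render_action_overlay(image, keypoints_cam, fx, fy, cx, cy, color=(255, 0, 0), radius=2):
    """Return a copy of an HxWx3 uint8 image with the projected keypoints drawn as small squares."""
    out = image.copy()
    h, w = out.shape[:2]
    in_front = keypoints_cam[keypoints_cam[:, 2] > 0]  # ignore points behind the camera
    pixels = np.rint(project_points(in_front, fx, fy, cx, cy)).astype(int)
    for u, v in pixels:
        if 0 <= u < w and 0 <= v < h:  # skip points that fall outside the image
            out[max(v - radius, 0):v + radius + 1, max(u - radius, 0):u + radius + 1] = color
    return out


# Usage: gripper 1 m in front of a 640x480 camera, with no rotation.
observation = np.zeros((480, 640, 3), dtype=np.uint8)
keypoints = keypoints_for_action(np.array([0.0, 0.0, 1.0]), np.eye(3))
overlay = render_action_overlay(observation, keypoints, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Because the rendered gripper lives in the same pixel space as the observation, a single image model can reason about both, which is the intuition behind representing actions and observations together.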

Practical Applications and Results

The researchers evaluated their method in simulation and on real-world tasks. In simulation, R&D improved the generalization capabilities of robotic policies. The team also demonstrated the method on six everyday tasks using a real robot:

- Putting down a toilet seat

- Sweeping a cupboard

- Opening a box

- Placing an apple in a drawer

- Opening a drawer

- Closing a drawer

These demonstrations suggest that R&D could simplify how robots acquire new skills while reducing the amount of training data required.

Implications and Future Directions

Vosylius expressed enthusiasm about the data efficiency gained by using virtual renders of the robot to represent its actions. This could ease the labor-intensive process of collecting large numbers of demonstrations for robot training.

Looking ahead, the researchers see potential in combining their approach with powerful image foundation models trained on massive internet datasets. This integration could allow robots to leverage general knowledge captured by these models while still reasoning about low-level robot actions.

The R&D method is a step toward simpler robot skill acquisition. As the field of robotics evolves, approaches like R&D could help produce more adaptable and efficient robotic systems that learn and perform a wide range of tasks with minimal human intervention.

As this technology matures, it could find uses in industries such as manufacturing, healthcare, and domestic assistance. Teaching robots new skills more efficiently could accelerate the adoption of robotic solutions in these and other applications.