Researchers at the Massachusetts Institute of Technology's (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a system called OmniBody that lets robots learn about their own bodies using only visual information. Using footage from external cameras, the robot records its own movements and analyzes the frames to build an internal digital model of its geometry and how it can move.

▌ How OmniBody Works

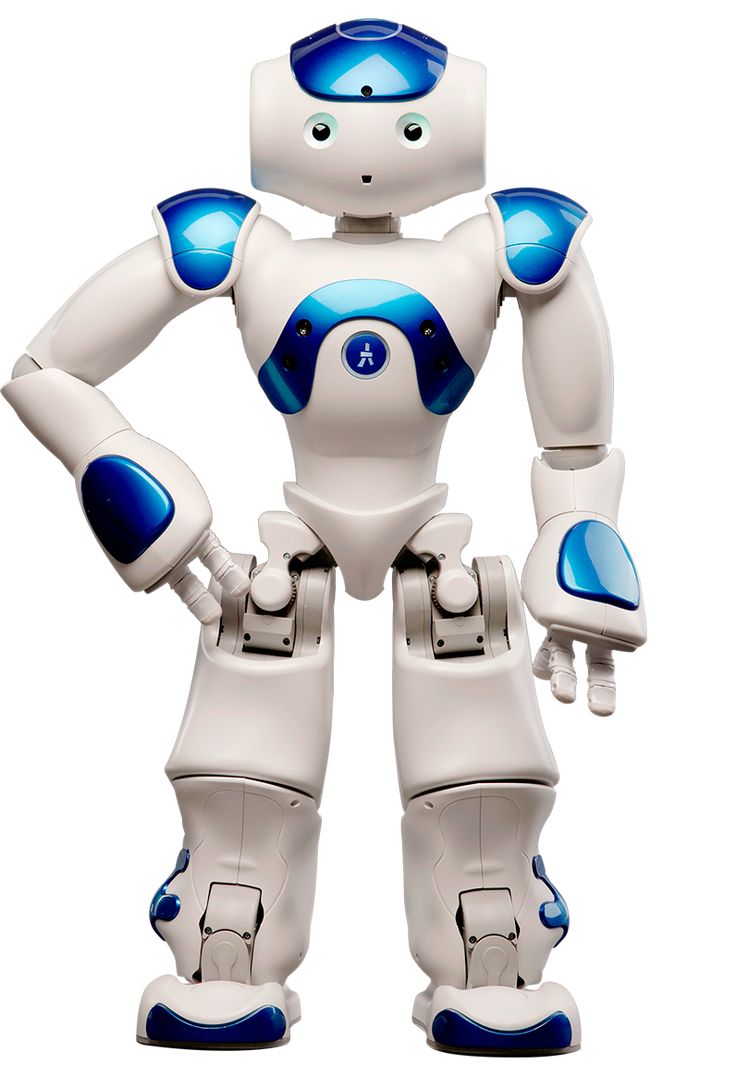

The system relies on a third-person viewpoint: external cameras record the robot as it moves. By analyzing this video, the robot learns to control itself purely from visual input and from experimenting with its own movements. This changes how robots can be designed, because engineers no longer have to embed expensive sensors or limit themselves to simple geometric forms that are easy to model.
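The article does not describe OmniBody's internals. As a purely illustrative toy, the general idea of a robot learning how its commands move its body by watching itself can be sketched as "motor babbling": issue random commands, record the motion an external camera observes, and fit a model that can then be inverted for control. This sketch assumes a simulated camera and a single linear command-to-motion model fitted by least squares. It is not claimed to match OmniBody's actual approach, and the names `TRUE_JACOBIAN`, `observe_displacement`, `learn_self_model`, and `command_for` are hypothetical.

```python
import numpy as np

# Hypothetical "true" body (an assumption for this toy): maps a 2-D motor command
# to the 2-D displacement of a point seen by the external camera.
# The learner never reads this matrix directly; it only sees camera observations.
TRUE_JACOBIAN = np.array([[0.8, -0.3],
                          [0.2, 1.1]])


def observe_displacement(command, rng, noise=0.01):
    """Stand-in for the camera: the tracked point's motion plus small pixel noise."""
    return TRUE_JACOBIAN @ command + rng.normal(scale=noise, size=2)


def learn_self_model(num_trials=200, seed=0):
    """Motor babbling: try random commands, record what the camera sees,
    then fit a linear command-to-motion model by least squares."""
    rng = np.random.default_rng(seed)
    commands = rng.uniform(-1.0, 1.0, size=(num_trials, 2))
    motions = np.array([observe_displacement(c, rng) for c in commands])
    # Each row satisfies motion ≈ command @ J.T, so solve commands @ X ≈ motions for X = J.T.
    j_transposed, *_ = np.linalg.lstsq(commands, motions, rcond=None)
    return j_transposed.T


def command_for(target_motion, model):
    """Invert the learned model to choose a command expected to produce the target motion."""
    return np.linalg.solve(model, target_motion)


if __name__ == "__main__":
    model = learn_self_model()
    cmd = command_for(np.array([0.5, 0.5]), model)
    print("learned model:\n", np.round(model, 2))
    print("resulting motion on the true body:", np.round(TRUE_JACOBIAN @ cmd, 2))
```

The linear model keeps the example small. A real soft or irregularly shaped robot would need a far richer, state-dependent model, but the loop of acting, observing through a camera, and fitting a self-model is the same in spirit.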

▌ Significance of the Discovery

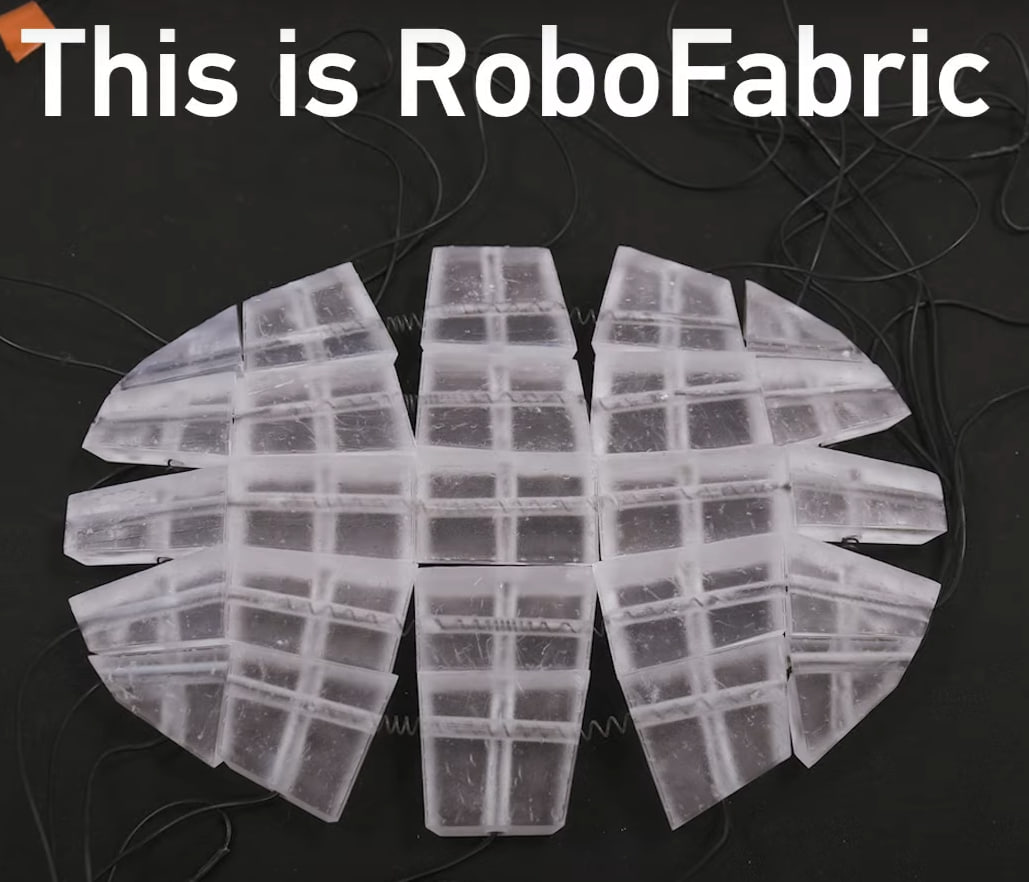

OmniBody's key advantage is that it widens the range of soft robots and unconventionally shaped devices that can be put to practical use. Soft robots are typically hard to control because they deform unpredictably and their movements are difficult to model. OmniBody is designed to get around this limitation, letting robots adjust dynamically to changes in their environment and in their own internal state.

The approach could push the boundaries of robotics. In experiments so far, OmniBody has worked reliably even in complex scenarios, such as moving hands or legs in unusual configurations. This versatility could pave the way for inexpensive, adaptable robots that operate effectively in unstructured settings, from farms to emergency response.

▌ Opportunities and Limitations

The researchers acknowledge that the current implementation still requires external cameras and separate calibration for each type of robot. Ongoing work aims to overcome these constraints and make OmniBody broadly accessible.

More broadly, OmniBody points to a shift in robotics from explicitly programming robots to teaching them to perceive their surroundings and control their own movements. This shift brings affordable, user-friendly, general-purpose robots that can act autonomously in diverse settings a step closer.

OmniBody currently deals mainly with kinematics, but the researchers intend to add force sensing and tactile perception, making robots more like living beings. They expect this to enable more capable assistants for everyday life, manufacturing, and science, and to significantly improve how humans and robots interact.