A persistent challenge in robotics is building robots that can adapt to diverse tasks and environments. Traditionally, training has meant painstakingly collecting highly specific data for an individual robot and a narrow task, which produces machines that struggle outside the conditions they were trained for.

A new approach from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) aims to change how robots are trained, drawing inspiration from large language models like GPT-4.

Breaking the Training Bottleneck

Led by electrical engineering and computer science graduate student Lirui Wang, the research team has developed a novel technique called Heterogeneous Pretrained Transformers (HPT). This innovative method addresses a fundamental problem in robotics: the extreme diversity and fragmentation of training data.

"In robotics, people often claim that we don't have enough training data," Wang explains. "But another big problem is that the data come from so many different domains, modalities, and robot hardware."

The core idea is simple. HPT creates a shared "language" that can integrate data from very different sources, including simulation environments, human demonstration videos, and various robotic sensor inputs, so that robots can be trained more efficiently and effectively.

A Universal Approach to Robot Learning

Traditional robot training typically involves:

- Collecting task-specific data

- Training in controlled environments

- Struggling to adapt to new scenarios

The MIT team's method flips this paradigm on its head. Their transformer-based architecture can:

- Unify data from multiple sources

- Process diverse inputs like camera images, language instructions, and depth maps

- Standardize inputs into a consistent format

- Learn across different robot designs and configurations

Technical Innovation: How HPT Works

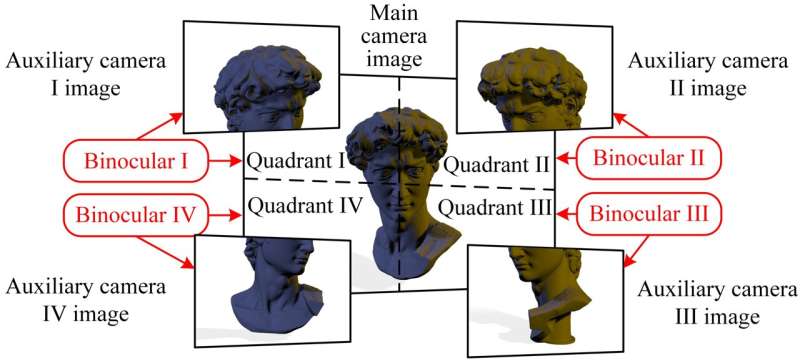

At the core of HPT is a transformer, the same type of architecture that powers large language models. This transformer can:

- Align data from vision and proprioception sensors

- Represent all inputs using a fixed number of tokens

- Map inputs into a shared computational space

- Grow more capable as it processes more data
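
To make the "fixed number of tokens" and "shared space" ideas concrete, here is a minimal sketch of one common way to achieve them: project each modality into a shared width, then pool with a fixed set of query vectors (attention pooling). The article does not say which mechanism HPT uses, so treat this as an illustration rather than HPT's implementation. The names `DIM`, `NUM_TOKENS`, `project`, and `attention_pool` are hypothetical, and random weights stand in for learned ones.

```python
import math
import random

DIM = 8         # shared embedding width (hypothetical value)
NUM_TOKENS = 4  # fixed token count per modality (hypothetical value)


def random_matrix(rows, cols, rng):
    """Random values standing in for learned weights or sensor features."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def project(vectors, weight):
    """Linearly map each input vector (length in_dim) to the shared width.

    `weight` has shape in_dim x DIM.
    """
    out_dim = len(weight[0])
    return [
        [sum(v[i] * weight[i][j] for i in range(len(v))) for j in range(out_dim)]
        for v in vectors
    ]


def attention_pool(features, queries):
    """Pool any number of feature vectors into len(queries) tokens.

    Each query takes a softmax-weighted average of the features, so the
    output length depends only on the number of queries.
    """
    dim = len(queries[0])
    tokens = []
    for q in queries:
        scores = [
            sum(qi * fi for qi, fi in zip(q, f)) / math.sqrt(dim)
            for f in features
        ]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        tokens.append(
            [sum(w * f[d] for w, f in zip(weights, features)) / total
             for d in range(dim)]
        )
    return tokens


rng = random.Random(0)
queries = random_matrix(NUM_TOKENS, DIM, rng)

# Two modalities with different shapes: 49 image patches of width 32,
# and a single 7-value joint-state (proprioception) reading.
camera = random_matrix(49, 32, rng)
proprio = random_matrix(1, 7, rng)

camera_tokens = attention_pool(project(camera, random_matrix(32, DIM, rng)), queries)
proprio_tokens = attention_pool(project(proprio, random_matrix(7, DIM, rng)), queries)

# Both modalities now have the same shape and can be concatenated
# into one sequence for a shared transformer.
sequence = camera_tokens + proprio_tokens
```

Whatever the input size, each modality ends up as `NUM_TOKENS` vectors of width `DIM`, which is what lets a single downstream model consume data from differently equipped robots.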

The system requires little robot-specific training. Users provide only a small amount of information about their robot's design and the desired task, and HPT transfers the knowledge it gained during pre-training to learn the new skill.

Impressive Performance Gains

In experiments, HPT:

- Improved robot performance by more than 20% in both simulated and real-world environments, compared with training from scratch each time

- Maintained strong performance even with tasks significantly different from pre-training data

- Reduced the need for extensive, task-specific data collection

The Future of Robotic Intelligence

The researchers' ultimate vision is ambitious: a "universal robot brain" that could be downloaded and put to work immediately on different robotic platforms.

David Held, an associate professor at Carnegie Mellon University's Robotics Institute, praised the approach, noting its potential to "significantly scale up the size of datasets that [robotic] learning methods can train on" and quickly adapt to emerging robot designs.

While the current HPT system represents a significant leap forward, the MIT team isn't stopping here. Future research aims to:

- Further explore how data diversity impacts performance

- Develop capabilities for processing unlabeled data

- Continue pushing the boundaries of robotic learning

As robot technologies become increasingly integral to industries ranging from manufacturing to healthcare, innovations like HPT offer a glimpse into a future where machines can learn, adapt, and perform with unprecedented flexibility.

The dream of a truly general-purpose robot is inching closer to reality—one transformed dataset at a time.