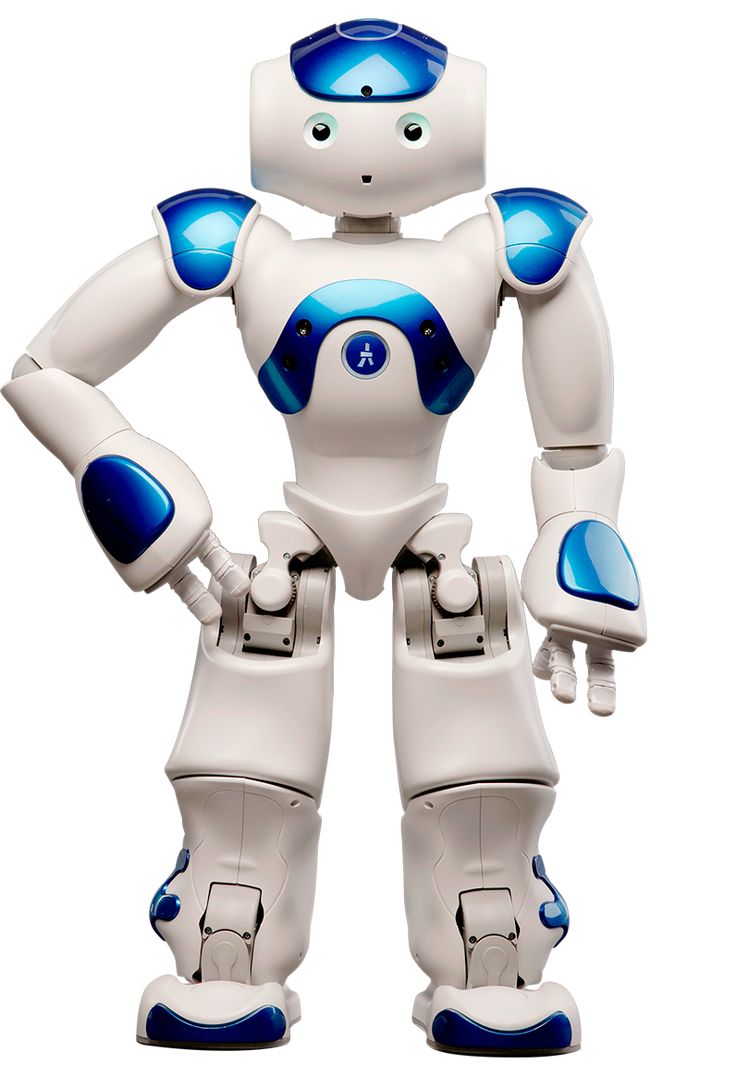

Google's AI division, DeepMind, has created a new way to keep robots safe around humans and says that the famous robot laws from science fiction are no longer sufficient for today's advanced machines. Carolina Parada, who leads robotics at Google DeepMind, recently announced that her team has moved beyond the classic "Three Laws of Robotics" that author Isaac Asimov wrote in the 1940s. In their place, the team has built the "Asimov Dataset", a large database of examples that teaches robots how to behave safely in real-world situations.

Why Old Rules Don't Work Anymore

Asimov's original laws were simple: a robot must not harm a human or, through inaction, allow a human to come to harm; it must obey human orders unless those orders conflict with the first law; and it must protect its own existence unless doing so conflicts with the first two laws. These rules served science fiction well, but today's robots are far more complex.
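Read literally, the three laws form a strict priority order. As a minimal sketch, assuming each candidate action can be hand-labeled with yes/no flags for the three laws (the `Action` class, its fields, and the delivery scenario are hypothetical), Python's tuple comparison picks the action whose violations are least severe under that order:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action, hand-labeled against each law."""
    name: str
    harms_human: bool     # First Law: injures a person or lets one come to harm
    disobeys_order: bool  # Second Law: ignores a human instruction
    damages_robot: bool   # Third Law: puts the robot itself at risk


def choose(actions: List[Action]) -> Action:
    """Return the action whose violations are least severe in priority order.

    Tuples compare element by element and False < True, so min() first
    avoids harming humans, then avoids disobeying, then avoids self-damage.
    """
    return min(actions, key=lambda a: (a.harms_human, a.disobeys_order, a.damages_robot))


# Hypothetical situation: ordered to deliver a package through a busy hallway.
options = [
    Action("walk straight through the crowd", harms_human=True, disobeys_order=False, damages_robot=False),
    Action("take the stairs, risking a fall", harms_human=False, disobeys_order=False, damages_robot=True),
    Action("refuse the delivery", harms_human=False, disobeys_order=True, damages_robot=False),
]

print(choose(options).name)  # prints: take the stairs, risking a fall
```

The robot accepts risk to itself rather than disobey, and would disobey rather than endanger people. Every real judgment, though, lives in the hand-set flags: nothing in the rules themselves can tell whether walking through the crowd is actually dangerous.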

"Modern robots don't just follow strict rules - they learn and analyze risks on their own," Parada explained. That means they need richer guidance than a short list of dos and don'ts.

How the New System Works

The Asimov Dataset works more like a training manual than a rulebook. It shows robots thousands of scenarios in which they need to make safety decisions, along with the best response to each one.

For example:

- If a robot sees a glass sitting dangerously close to the edge of a table, it learns to move it to the center where it won't fall

- If it spots an object on the floor where someone might trip, it knows to pick it up and put it somewhere safe
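The article does not describe the dataset's actual format. Purely as an illustration, each scenario can be pictured as a situation paired with its hazard and the response the robot should learn. The `SafetyScenario` class, its fields, and the `recommended_action` helper below are hypothetical, not DeepMind's schema; the two records encode the examples above:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SafetyScenario:
    """Hypothetical training example: a situation and its safest response."""
    situation: str
    hazard: str
    recommended_action: str


# The article's two examples, encoded as hypothetical records.
SCENARIOS = [
    SafetyScenario(
        situation="glass near the edge of a table",
        hazard="glass may fall and break",
        recommended_action="move the glass to the center of the table",
    ),
    SafetyScenario(
        situation="object on the floor in a walkway",
        hazard="someone may trip",
        recommended_action="pick up the object and put it somewhere safe",
    ),
]


def recommended_action(situation: str) -> Optional[str]:
    """Return the stored response for an exactly matching situation, or None."""
    for scenario in SCENARIOS:
        if scenario.situation == situation:
            return scenario.recommended_action
    return None


print(recommended_action("glass near the edge of a table"))
# prints: move the glass to the center of the table
```

A real system would not rely on exact string matching; the point of training on many such examples is for a model to generalize to situations it has never seen. The lookup only shows how a scenario and its answer are paired.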

These aren't just theoretical examples. The database includes real incidents that have happened in different countries, giving robots practical experience with actual safety challenges they might face.

What Makes This Different

DeepMind's approach has several key features that set it apart from previous robot safety methods:

Constant Updates: The dataset continuously grows as new situations are discovered. Unlike fixed rules, this system evolves with real-world experience.

Human-AI Partnership: People work alongside AI to review and correct the safety instructions, ensuring that human judgment remains part of the decision-making process.

Open Source: Other robot developers can access and test the dataset, meaning safety improvements can benefit the entire robotics industry, not just Google's robots.
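Because the dataset is open, other developers can score their own models against it. A minimal sketch of such a test, assuming each entry stores a proposed action and a reference yes/no answer on whether it is safe; `Example`, `evaluate`, and the toy `keyword_model` are hypothetical names for illustration, not part of any published API:

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Example:
    """Hypothetical benchmark item: a proposed action and whether it is safe."""
    prompt: str
    is_safe: bool  # reference answer, assumed to come from the dataset


def evaluate(model: Callable[[str], bool], examples: List[Example]) -> float:
    """Return the fraction of examples where the model agrees with the reference."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(1 for ex in examples if model(ex.prompt) == ex.is_safe)
    return correct / len(examples)


def keyword_model(prompt: str) -> bool:
    """Toy stand-in for a real model: calls anything mentioning an edge unsafe."""
    return "edge" not in prompt


EXAMPLES = [
    Example("leave the glass at the table edge", is_safe=False),
    Example("move the glass to the center of the table", is_safe=True),
    Example("leave the toy on the stairs", is_safe=False),
]

print(evaluate(keyword_model, EXAMPLES))  # prints: 0.6666666666666666
```

The third example shows why a single keyword rule falls short: the toy model has no notion of stairs, so it misses that hazard, and the score reflects it.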

Learning From Real Life

What makes this system particularly powerful is that it's based on actual incidents rather than imagined scenarios. When accidents or near-misses involving robots happen anywhere in the world, that information gets added to the database so all robots can learn from it.
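The article describes new incidents flowing into the database, with people reviewing and correcting the safety instructions. One way to picture that loop, as a sketch under assumptions: an AI drafts a response to a reported incident, a human reviewer approves, corrects, or rejects it, and only reviewed entries are added. `SafetyDataset`, `ai_draft`, and `human_review` are hypothetical stand-ins:

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Entry:
    incident: str
    response: str


@dataclass
class SafetyDataset:
    """Hypothetical growing dataset; each accepted entry bumps the version."""
    entries: List[Entry] = field(default_factory=list)
    version: int = 0

    def add_incident(
        self,
        incident: str,
        draft_response: Callable[[str], str],
        review: Callable[[str, str], Optional[str]],
    ) -> bool:
        """Let an AI draft a response, then a human approve, correct, or reject it.

        `review` returns the final response text, or None to reject the entry.
        """
        draft = draft_response(incident)
        final = review(incident, draft)
        if final is None:
            return False  # rejected drafts never reach the dataset
        self.entries.append(Entry(incident, final))
        self.version += 1
        return True


def ai_draft(incident: str) -> str:
    # Toy stand-in for a model-generated safety instruction.
    return "warn nearby people"


def human_review(incident: str, draft: str) -> Optional[str]:
    # Toy reviewer: corrects drafts about spills, accepts everything else.
    if "spill" in incident:
        return "mark the spill and wipe it up before anyone slips"
    return draft


dataset = SafetyDataset()
dataset.add_incident("liquid spill on a kitchen floor", ai_draft, human_review)
print(dataset.version, dataset.entries[0].response)
# prints: 1 mark the spill and wipe it up before anyone slips
```

Gating every addition on human review keeps people in the decision-making process, at the cost of slower growth; the version counter is one simple way to record which snapshot a robot learned from.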

This creates a kind of collective memory where every robot benefits from the experiences of others, similar to how human societies pass down safety knowledge through generations.

The Future of Robot Safety

DeepMind's approach represents a major shift in thinking about robot safety. Instead of trying to predict every possible dangerous situation and write rules for it, they're creating robots that can think through safety problems themselves while still being guided by human values.

As robots become more common in homes, workplaces, and public spaces, this kind of adaptive safety system could be crucial. Rather than waiting for accidents to happen and then writing new rules, the aim is for robots to spot potential dangers and prevent them before anyone gets hurt.

The science fiction author Isaac Asimov gave us a good starting point for thinking about robot safety, but Google DeepMind's work shows that keeping humans safe in the age of AI requires something far more sophisticated, and far more collaborative between humans and machines.