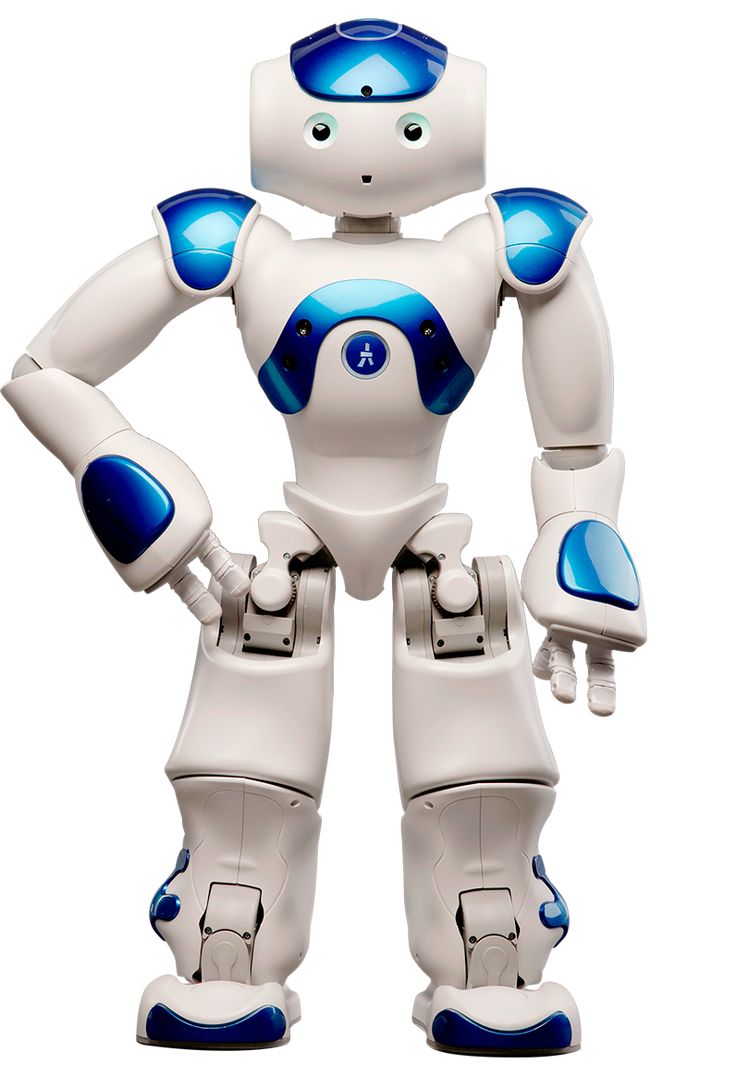

In a breakthrough that could transform how robots interact with their environment, Duke University researchers have developed a robotic hand that perceives objects by listening to the vibrations produced when it touches them. Named SonicSense, the low-cost system uses these acoustic vibrations to identify objects, determine their material composition, and even count items inside containers, all without relying on traditional visual sensors.

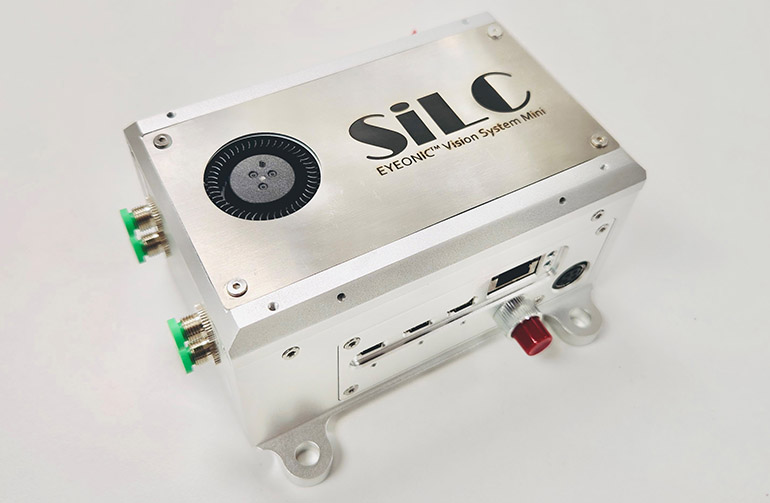

The technology, which costs just over $200 to build, marks a shift in how robots perceive their surroundings. Unlike conventional robots that depend primarily on cameras and visual processing, SonicSense uses contact microphones, the same type musicians use to record acoustic guitars, embedded in each of its four fingertips.

"Traditional robotics has been dominated by visual processing, but that's not how humans exclusively experience the world," explains Jiaxun Liu, the study's lead author and a Ph.D. student at Duke. "We wanted to create something that could work with the complex, diverse objects we encounter daily, giving robots a much richer ability to 'feel' and understand their environment."

The system's capabilities are remarkably human-like. When encountering an object, SonicSense can tap, grasp, or shake it, analyzing the resulting vibrations to build a comprehensive understanding of its properties. This approach proves particularly effective in scenarios where visual information might be misleading or insufficient.

Perhaps most impressively, SonicSense can perform tasks that would challenge even robots with advanced vision systems. In demonstrations, the system successfully:

- Determined the number and shape of dice hidden inside a closed container

- Measured liquid levels in bottles through simple shaking motions

- Created detailed 3D reconstructions of objects by tapping their surfaces

- Identified materials through their unique vibrational signatures
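To make the last item concrete, here is a minimal Python sketch of matching a tap to a material by its dominant vibration frequency. It is an illustration only, not the Duke team's method: the article does not describe how SonicSense classifies signals, and the sample rate, the synthetic tap generator `synth_tap`, and the reference frequencies in `REFERENCE_HZ` are all hypothetical values chosen for the example.

```python
import math

SAMPLE_RATE = 8000  # Hz; assumed for this sketch, not a SonicSense spec


def synth_tap(freq_hz, decay=30.0, duration=0.05):
    """Synthesize a damped sinusoid standing in for a recorded tap."""
    n = int(SAMPLE_RATE * duration)
    return [
        math.exp(-decay * i / SAMPLE_RATE)
        * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        for i in range(n)
    ]


def dominant_frequency(signal):
    """Return the frequency (Hz) of the strongest bin of a naive DFT."""
    n = len(signal)
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2):  # skip the DC bin, stop below Nyquist
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * SAMPLE_RATE / n


# Hypothetical reference signatures in Hz; these are not measured values.
REFERENCE_HZ = {"wood": 400.0, "plastic": 900.0, "metal": 2200.0}


def identify_material(signal):
    """Pick the reference material whose frequency is closest to the tap's peak."""
    peak = dominant_frequency(signal)
    return min(REFERENCE_HZ, key=lambda name: abs(REFERENCE_HZ[name] - peak))


if __name__ == "__main__":
    print(identify_material(synth_tap(880.0)))  # prints "plastic"
```

A real system would work from far richer features than a single spectral peak, but the sketch shows why a vibration recording can carry material information that a camera image does not.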

Professor Boyuan Chen, who leads the research team, emphasizes the system's practical applications: "While vision is essential, sound adds layers of information that can reveal things the eye might miss. This multi-modal approach to perception could be crucial for robots working in complex, real-world environments."

The system's learning process mirrors human experience. When encountering a new object, SonicSense might need up to 20 different interactions to form a complete understanding. Once an object is familiar, however, the system can identify it with as few as four interactions, demonstrating a form of mechanical memory that could prove invaluable in real-world applications.

What sets SonicSense apart from other robotic perception systems is its ability to function in noisy, uncontrolled environments. Because the contact microphones only detect vibrations through direct touch, ambient noise doesn't interfere with the system's ability to "hear" objects. This makes it particularly suitable for deployment in dynamic, real-world settings where controlled conditions are impossible to maintain.

The technology's low cost, achieved through commercially available components and 3D printing, makes it attractive for widespread adoption. "We've demonstrated that sophisticated robotic perception doesn't necessarily require expensive, specialized sensors," Liu notes. "Sometimes, the most elegant solutions are also the most accessible."

Looking ahead, the Duke team is already working on expanding SonicSense's capabilities. Planned developments include improving the system's ability to handle multiple objects simultaneously and integrating additional sensory inputs such as pressure and temperature. These improvements could lead to robots with increasingly sophisticated manipulation skills, capable of performing complex tasks that require a nuanced understanding of physical objects.

As robotics continues to evolve, technologies like SonicSense suggest a future where machines don't just see the world—they feel it, hear it, and understand it in ways that more closely mirror human perception. And at a price point of just over $200, that future might be closer than we think.