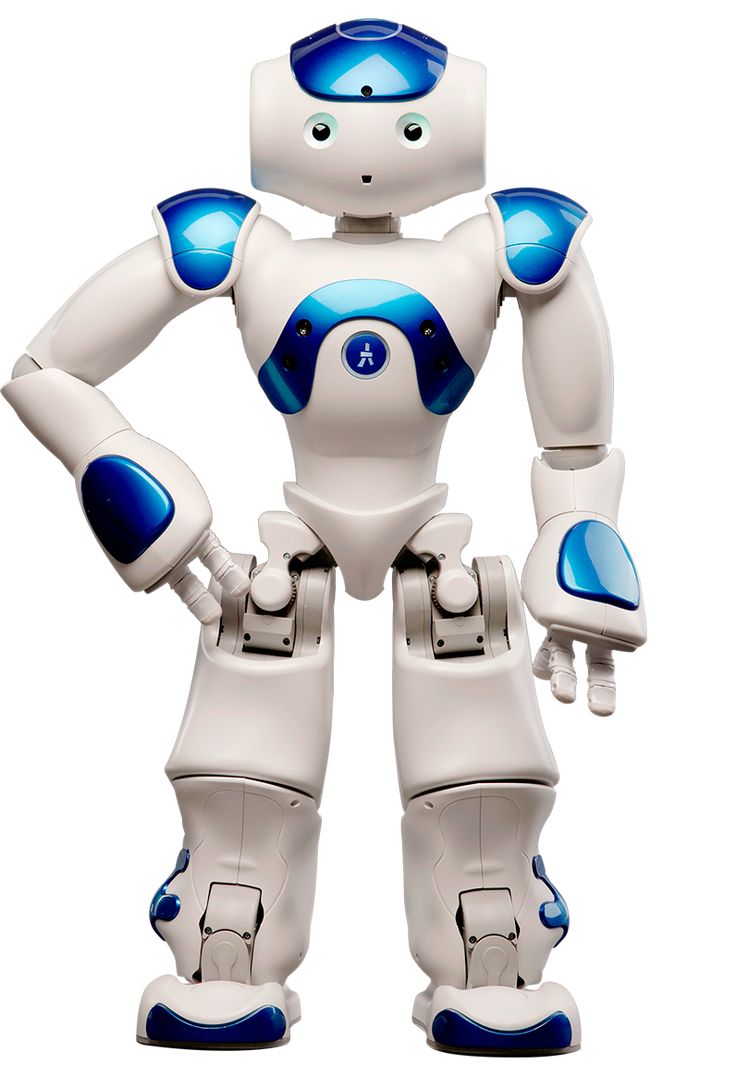

New research from Pennsylvania State University suggests the speed and conversational style of voice assistants like Siri and Alexa impact how people perceive their relationship with the AI. The findings shed light on how to design more effective and trusted digital assistants.

Led by PhD student Brett Christenson, the PSU team conducted three studies examining how changes to an assistant's speech patterns influence users' willingness to use it and share information with it. The goal was to understand which factors lead people to view smart devices as useful partners rather than controlled toys.

In the first study, 753 participants used a budgeting assistant, and a moderate speaking pace increased perceived usefulness compared with slow or fast speeds. The second study showed that a conversational dialogue style mitigated the negative effects of less-than-ideal speeds. The third confirmed these results using Amazon's Alexa and also analyzed how participants perceived the device's role.

Across the studies, moderate-tempo voices were most preferred for completing tasks. Meanwhile, conversation-style interactions boosted confidence in faster and slower assistants. Regardless of speed, most participants viewed the assistants as robotic, but conversational modes made them more likely to see the assistants as partners.

"We endow these digital assistants with personal and human qualities, and this affects how we interact with these devices," says Brett Christenson, the first author of the study. "If it were possible to develop the perfect voice for every consumer, it could be a very useful tool."

The research highlights the importance of vocal pacing and natural back-and-forth for building rapport with voice AI. While assistants are not human, conveying an illusion of partnership through lifelike speech encourages users to trust the technology.

PSU's studies build a framework for designing more relatable assistants. Factors like moderate cadence and conversational engagement make people more receptive to relying on AI. This could shape voice user interfaces that feel cooperative rather than controlling.

"The reason we did three different studies is to establish the status quo and then develop it by adding one element at a time, and thus get reproducible results," Christenson said. "In the first, second and third studies, we got the same results regarding people's reactions to slow and fast, as well as moderate voice. We found that people really like a moderate pace."

With voice technology proliferating, these insights could help developers craft assistants that form productive connections with users. The results suggest that more human-like speech patterns can help AI build trust through natural interaction.