Researchers from the Georgia Institute of Technology have developed a technique that allows robots to detect and locate nearby humans using the barely perceptible sounds people make while moving. Described in a paper titled "The Un-Kidnappable Robot: Acoustic Localization of Sneaking People," posted on arXiv, the method offers an alternative to traditional computer vision for human localization that is more scalable, less intrusive and applicable to diverse robotic systems.

Led by Ph.D. student Mengyu Yang, the Georgia Tech team created a machine learning model trained on a new dataset called the Robot Kidnapper dataset. This dataset contains 14 hours of high-fidelity four-channel audio paired with video recordings captured while people moved around a robot in different ways. By training on this varied acoustic data, the model learned to pinpoint human locations based on subtle sounds from footsteps, clothing and more, while filtering out irrelevant noises like HVAC systems.
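
To give a sense of why multi-channel audio can carry location information at all, here is a minimal sketch of a classical, non-learned approach: estimating the time difference of arrival between two microphones by cross-correlation and converting it to a bearing. This is not the Georgia Tech team's model, which is learned from data. The function names, the 16 kHz sample rate, the 10 cm microphone spacing and the synthetic noise signal are all assumptions made for illustration.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, approximate value in room-temperature air


def estimate_delay_samples(ch_a, ch_b):
    """Return the lag (in samples) by which ch_b trails ch_a."""
    # Full cross-correlation; the peak marks the best-aligning shift.
    corr = np.correlate(ch_b, ch_a, mode="full")
    lags = np.arange(-(len(ch_a) - 1), len(ch_b))
    return int(lags[np.argmax(corr)])


def delay_to_bearing_deg(delay_samples, sample_rate, mic_spacing_m):
    """Convert a two-microphone delay to a far-field bearing in degrees."""
    path_difference = SPEED_OF_SOUND * delay_samples / sample_rate
    # Clip so measurement noise cannot push the ratio outside arcsin's domain.
    ratio = np.clip(path_difference / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))


# Synthetic example (hypothetical values): a noise-like "footstep" signal
# that reaches microphone B three samples after microphone A.
rng = np.random.default_rng(0)
source = rng.standard_normal(2048)
true_delay = 3
mic_a = source
mic_b = np.concatenate([np.zeros(true_delay), source[:-true_delay]])

delay = estimate_delay_samples(mic_a, mic_b)
bearing = delay_to_bearing_deg(delay, sample_rate=16_000, mic_spacing_m=0.10)
print(f"estimated delay: {delay} samples, bearing: {bearing:.1f} degrees")
```

A hand-built estimator like this tends to struggle when the sound is very quiet or masked by steady background noise such as HVAC, which is the kind of situation the learned model described above is meant to handle.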

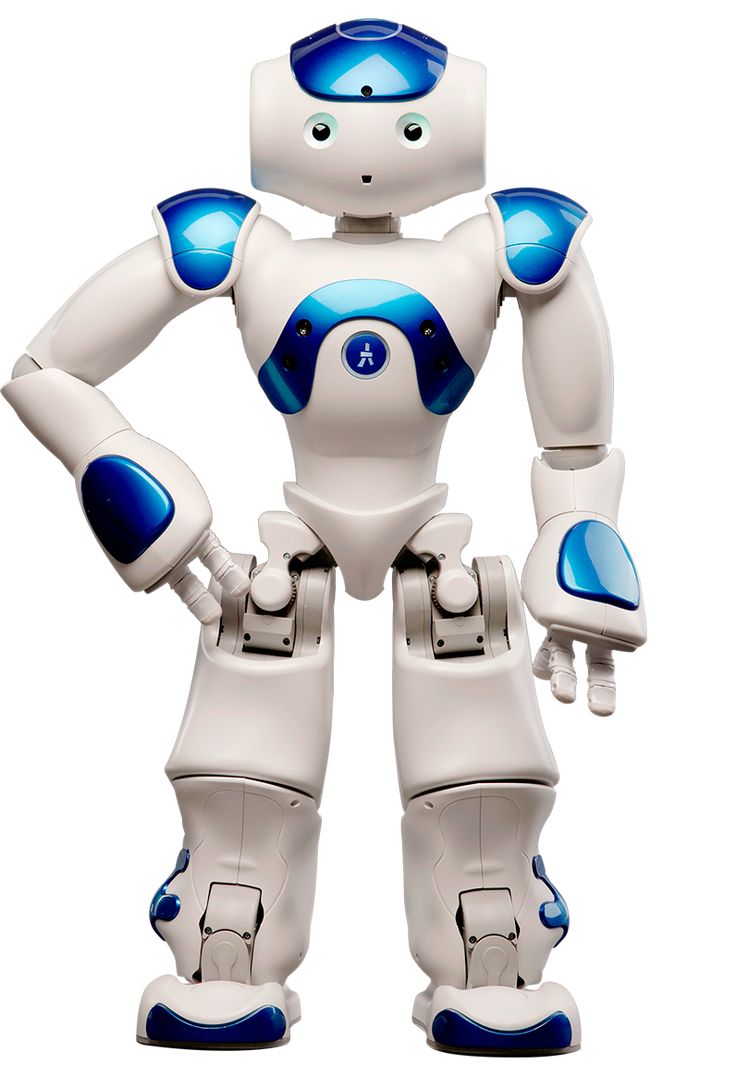

In initial tests on the Hello Robot Stretch RE-1, the acoustic localization technique proved twice as accurate as other methods, successfully detecting nearby humans solely through sounds from walking. The approach is highly scalable, eliminating the need for cameras or other sensors. It is also less intrusive than vision-based techniques and helps preserve user privacy.

“Recently, our group has been interested in studying what types of ‘hidden’ information are freely available that we can train models on,” says Yang. “Often in robotics, acoustic detection of a person requires loud noises like talking or clapping. We wanted to see if the unintentional sounds of a person moving could become that signal.”

The researchers note that the current method only detects moving humans but hope to expand it to locate stationary people in future work. This could further improve safety for collaborative robots designed to interact closely with humans. The team also believes the technique could inspire new machine learning applications in acoustics and robotics, as well as security-related fields.

By uncovering subtle audio cues emitted naturally by the human body, the Georgia Tech researchers have demonstrated a promising new technique for non-visually localizing people. Their work shows the feasibility of leveraging machine learning to exploit ambient sounds for robotic perception and could lead to smarter, more perceptive robots capable of safely sharing spaces with humans.